Why do we rely on specific information over statistics?

Base Rate Fallacy, explained

What is the Base Rate Fallacy?

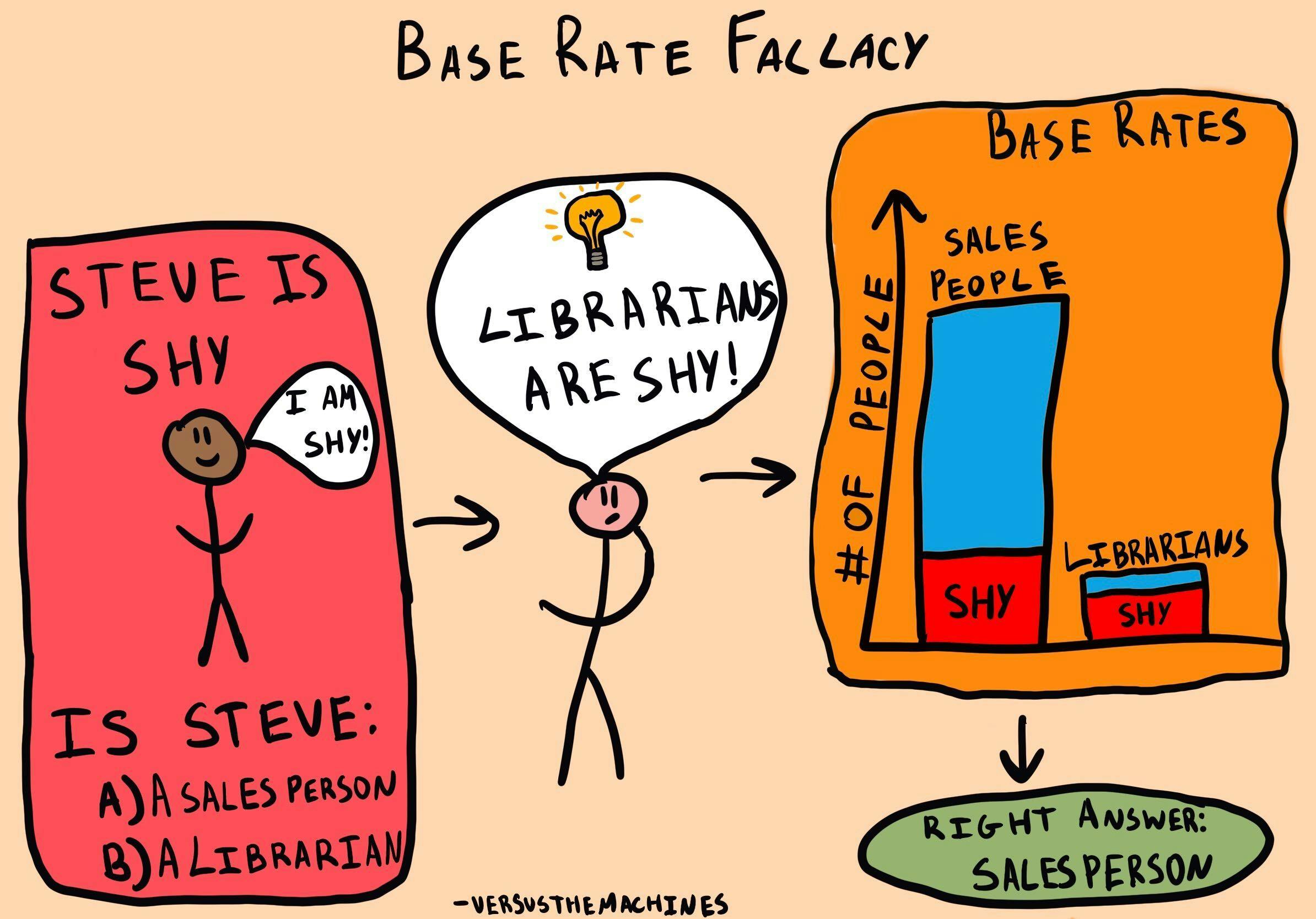

When provided with both individuating information, which is specific to a certain person or event, and base rate information, which is objective, statistical information, we tend to assign greater value to the specific information and often ignore the base rate information altogether. This is referred to as the base rate fallacy or base rate neglect.

Where this bias occurs

If you’ve ever been a college student, you probably know that there are certain stereotypes attached to different majors. For example, students in engineering are often viewed as hardworking but cocky, students in business are stereotypically preppy and aloof, and arts students are activists with an edgy fashion sense. These stereotypes are wide generalizations, which are often way off the mark. Yet, they are frequently used to make projections about how individuals might act.

Renowned behavioral scientists Daniel Kahneman and Amos Tversky once conducted a study where participants were presented with a personality sketch of a fictional graduate student named Tom W. They were given a list of nine areas of graduate studies and told to rank them in order of likelihood that Tom W. was pursuing studies in that field. At the time, far more students were enrolled in education and the humanities than in computer science. However, 95% of participants said it was more likely that Tom W. was studying computer science than education or humanities. Their predictions were based purely on the personality sketch—the individuating information—with total disregard for the base rate information.1

As much as that one person in your history elective course might look and act like the stereotypical medical student, the odds that they are actually studying medicine are very low. There are typically only a hundred or so people in that program, compared to the thousands of students enrolled in other faculties like management or science. It is easy to make these kinds of snap judgments about people since specific information often overshadows base rate information.

Individual effects

The base rate fallacy can lead us to make inaccurate probability judgments in many different aspects of our lives. As demonstrated by Kahneman and Tversky in the previous example, this bias causes us to jump to conclusions about people based on our initial impressions of them.2 In turn, this can lead us to develop preconceived notions about people and perpetuate potentially harmful stereotypes.

This fallacy can also affect our financial decisions by prompting us to overreact to transient changes in our investments. If the base rate statistics show consistent growth, any setback is likely to be temporary, and things will probably get back on track. Yet if we ignore the base rate information, we may feel inclined to sell because we predict that the value of our stocks will keep declining.3

Systemic effects

The individual effects of the base rate fallacy can add up to significant problems when people make probability judgments about others, such as when a doctor diagnoses a patient. In their 1982 book, Judgment Under Uncertainty: Heuristics and Biases,4 Kahneman and Tversky cited a study that presented participants with the following scenario: “If a test to detect a disease whose prevalence is 1/1000 has a false positive rate of 5%, what is the chance that a person found to have a positive result actually has the disease, assuming you know nothing about the person's symptoms or signs?”

Half the participants responded 95%, the average answer was 56%, and only a handful of participants gave the correct response: about 2%. Although the participants in this study were not all practicing physicians, this example shows how important it is that medical professionals understand base rates and do not commit this fallacy. Forgetting to take base rate information into account can take a significant toll on a patient’s mental well-being, and it may stop physicians from examining other potential causes, since 95% odds seem close to certain.
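To see where the 2% figure comes from, the scenario can be worked through with Bayes’ theorem. The sketch below is a minimal illustration, not something taken from the original study. It assumes the test detects every true case (a sensitivity of 100%), which the scenario does not state but which the standard 2% answer relies on. The function name `posterior_given_positive` is hypothetical.

```python
def posterior_given_positive(prevalence: float,
                             false_positive_rate: float,
                             sensitivity: float = 1.0) -> float:
    """Return P(disease | positive test) using Bayes' theorem.

    sensitivity defaults to 1.0, an ASSUMPTION: the scenario does not
    say how often the test catches people who really have the disease.
    """
    # Share of all people who have the disease AND test positive.
    true_positives = prevalence * sensitivity
    # Share of all people who are healthy but still test positive.
    false_positives = (1 - prevalence) * false_positive_rate
    # Among everyone who tests positive, the fraction who are truly ill.
    return true_positives / (true_positives + false_positives)


p = posterior_given_positive(prevalence=1 / 1000, false_positive_rate=0.05)
print(f"{p:.1%}")  # prints 2.0%
```

In plain counts: out of 1,000 people tested, about 1 has the disease and tests positive, while roughly 50 healthy people (5% of 999) also test positive, so only about 1 in 51 positive results is genuine. The 95% answer treats the false positive rate as the whole story and ignores the 1-in-1,000 base rate.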

How it affects product

The base rate fallacy comes into play during online shopping. We may be more inclined to purchase a product based on one detailed review that resonates with us, rather than its overall ratings.

Imagine scrolling on social media and stumbling upon your favorite influencer promoting a skin care product. We might take their post as proof that the product works; after all, the ingredients are “all-natural,” and their face is glowing! Meanwhile, we might ignore the product’s two-star rating on Amazon or the fact that its sales dropped sharply soon after its release. Put simply, that one review sticks in our minds because it is individuating information, while the overall reviews slip from our consciousness because they are general base rate information. The result is a purchase driven by a single vivid testimonial.

The base rate fallacy and AI

As AI rises in popularity, it’s impossible to escape passionate debates about its usefulness. We might decide whether to embrace or reject machine learning tools based on others’ testimonies, regardless of the statistical evidence on how the software actually performs. For example, hearing a coworker rave about how ChatGPT helped them write a project proposal might make us view it as a great writing tool, even if the base rate information shows that it does not always help. Meanwhile, a skeptical older relative ranting about the ethics of AI may lead us to ignore the broader body of evidence that points the other way.

Why it happens

Several explanations have been proposed for why the base rate fallacy occurs, but two theories stand out from the rest. The first theory posits that it is a matter of relevance: we ignore base rate information because we judge it to be irrelevant to the decision at hand. The second theory suggests that the base rate fallacy results from the representativeness heuristic.

Relevance

Maya Bar-Hillel’s 1980 paper, “The base-rate fallacy in probability judgments”,5 addresses the limitations of previous theories and presents an alternate explanation: relevance. Specifically, we ignore base rate information because we believe it to be irrelevant to the judgment we are making.

Bar-Hillel contends that before making a judgment, we categorize information given to us into different levels of relevance. If something is deemed irrelevant, we discard it and do not factor it into our conclusion. It is not that we are incapable of integrating the information, but rather that we mistake it as not being valuable enough to integrate. This tendency causes us to ignore vital information, value certain information more than we should, or focus only on one source of information when we should be integrating multiple.

Furthermore, Bar-Hillel explains that part of what makes us view certain pieces of information as more relevant than others is specificity. The more specific information is to the situation at hand, the more relevant it seems. Individuating information is, by nature, highly specific, so we treat it as highly relevant. Base rate information, on the other hand, is very general, so we categorize it as having little relevance. Together, these differences in specificity lead us to consider only the individuating information when making decisions. What we fail to realize is that base rate information is often a better indicator of probability, so ignoring it compromises the accuracy of our judgments.

Representativeness

Bar-Hillel contends that representativeness alone is insufficient to explain why the base rate fallacy occurs, as it cannot account for all contexts.6 That being said, representativeness is still a factor contributing to the base rate fallacy, specifically in cases like the Tom W. study described by Kahneman and Tversky.7

Heuristics are mental shortcuts we use to simplify judgments and decisions. In particular, the representativeness heuristic, introduced by Kahneman and Tversky, describes our tendency to judge probability based on how closely something resembles the prototypical example of the category it falls into.

Let’s break down how this works. In general, we mentally categorize objects and events, grouping them by shared features. Each category has a prototype: the average example of all the members belonging to that category. The more something resembles that prototype, the more representative of the category we judge it to be, and the more likely we judge it to belong there.8 The representativeness heuristic gives rise to the base rate fallacy when we view an event or object as highly representative and make a probability judgment based solely on that resemblance, without stopping to consider base rates.

Going back to the Tom W. example, participants inferred his field of study solely from the personality sketch. Deeming him representative of a computer science graduate student, they ranked that field as the most likely, ahead of programs with far greater enrollment. Since there were far more students in both education and the humanities at that time, it was far more likely that he was in one of those fields. Yet representativeness caused participants to overlook the base rate information, resulting in inaccurate probability predictions.
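The trade-off between representativeness and base rates can be made concrete with the odds form of Bayes’ theorem: the posterior odds equal the prior odds (the base rates) multiplied by the likelihood ratio (how much better the sketch fits one field than another). The numbers below are invented purely for illustration and do not come from Kahneman and Tversky’s study; the function name `posterior_odds` is likewise hypothetical.

```python
def posterior_odds(prior_a: float, prior_b: float,
                   fit_a: float, fit_b: float) -> float:
    """Odds that a person belongs to field A rather than field B.

    prior_a, prior_b: base rates (share of students in each field).
    fit_a, fit_b:     P(description | field), i.e. how typical the
                      description is of students in that field.
    """
    prior_odds = prior_a / prior_b
    likelihood_ratio = fit_a / fit_b
    return prior_odds * likelihood_ratio


# HYPOTHETICAL inputs: computer science (A) vs. humanities and education (B).
# The sketch is assumed to be 5x more typical of computer science students,
# but humanities and education are assumed to enroll 8x as many students.
odds = posterior_odds(prior_a=0.05, prior_b=0.40, fit_a=0.50, fit_b=0.10)
print(f"odds of computer science vs. humanities/education: {odds:.3f}")
# prints 0.625, i.e. humanities/education is still more likely
```

Judging by fit alone, which is what representativeness does, would put computer science first. Multiplying by the base rates flips the comparison, because odds below 1 mean field B is more probable. Under these hypothetical base rates, the sketch would need to be more than 8 times as typical of computer science before that field came out ahead, which is why base rates have to be weighed rather than ignored.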

Why it is important

There are cases where relying solely on individuating information helps us recognize outliers, that is, cases that do not follow the usual statistical pattern. But in the overwhelming majority of cases, ignoring base rate information altogether leads to poor judgments. After all, we are basing our predictions on stereotypes instead of statistics.

Of course, guessing someone’s major or profession wrong is not a huge deal. However, the base rate fallacy also leads to unfounded assumptions about individuals that can have real consequences. For example, we might suspect that a colleague is more likely to commit a crime because of their race or religion, even when the statistics show otherwise. To counter prejudice and ensure we treat everyone fairly, we must learn to push back against the base rate fallacy.

How to avoid it

To avoid committing the base rate fallacy, we need to pay more attention to the base rate information available to us and recognize that individuating information is not a very reliable predictor of future behavior. Both of these require us to be more intentional when assessing the probability that a given event will occur. It’s tempting to fall back on effortless, automatic processes, which make decision-making much quicker. However, relying on them substantially increases the risk of error. By being aware of this fallacy and actively combating it, we can reduce how often we commit it and better understand the world around us.

How it all started

It’s impossible to discuss the base rate fallacy without mentioning Kahneman and Tversky. Their 1973 paper, “On the Psychology of Prediction,”9 describes how the representativeness heuristic can lead us to commit the base rate fallacy. They illustrated this through the previously mentioned Tom W. study, in which participants made their predictions based on the personality sketch and forgot to account for the number of graduate students enrolled in each program.

Another early explanation of the base rate fallacy is Maya Bar-Hillel’s 1980 paper, “The base-rate fallacy in probability judgments”.10 She describes the fallacy as “people’s tendency to ignore base rates in favor of, e.g., individuating information (when such is available), rather than integrate the two.” The paper points out the limitations of Kahneman and Tversky’s representativeness explanation, and provides an alternate theory explaining the base rate fallacy.

Specifically, Bar-Hillel pinpoints perceived relevance as the underlying factor. She suggests that the more specific information is, the more relevance we assign to it. As such, we attend to individuating information because it is specific, and therefore considered relevant. On the other hand, we ignore base rate information because it is general, and therefore deemed less relevant.

Example 1 – The cab problem

This classic example of the base rate fallacy comes from Bar-Hillel’s foundational paper.11 First, participants learn the following base rate information. A fictional city has two cab companies, named after the color of their taxis: the “Green” company and the “Blue” company. Of all the cabs in the city, 85% are blue and 15% are green.

Then, participants hear about a hypothetical scenario in which a witness identifies a cab involved in a nighttime hit-and-run as green. To assess the witness’s reliability, the court tests their ability to tell blue and green cabs apart under the same nighttime conditions. The results show that the witness identifies each color correctly 80% of the time and confuses the two 20% of the time. After hearing this scenario, participants estimate the probability that the cab involved in the hit-and-run was actually green.

Most participants answer that the probability that the witness correctly identified a green cab is 80%. However, anyone who gives that answer has committed the base rate fallacy. Recall that only 15% of the cabs in the city are green, which makes the actual probability that the witness was correct about 41%. This probability is calculated with Bayes’ theorem, which combines the percentage of each color of cab in the city with the likelihood that the witness correctly distinguished between the colors at night.
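The 41% figure can be reproduced in a few lines. The sketch below applies Bayes’ theorem and assumes, as the problem states, that the witness is equally accurate for both colors; the function name `prob_cab_was_green` is hypothetical.

```python
def prob_cab_was_green(share_green: float, witness_accuracy: float) -> float:
    """P(cab was green | witness says green), via Bayes' theorem.

    Assumes the witness is equally accurate for green and blue cabs.
    """
    # Cab really green and the witness correctly says "green".
    green_and_says_green = share_green * witness_accuracy
    # Cab really blue but the witness mistakenly says "green".
    blue_and_says_green = (1 - share_green) * (1 - witness_accuracy)
    # Fraction of all "green" reports that are correct.
    return green_and_says_green / (green_and_says_green + blue_and_says_green)


p = prob_cab_was_green(share_green=0.15, witness_accuracy=0.80)
print(f"{p:.0%}")  # prints 41%
```

In counts: of 100 cabs, 15 are green and the witness calls 12 of them green; 85 are blue and the witness wrongly calls 17 of them green. Only 12 of the 29 “green” reports are correct, and 12/29 is about 41%.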

Example 2 – How much will you donate?

In their 2000 paper, “Feeling ‘holier than thou’: are self-serving assessments produced by errors in self- or social prediction?”,12 Nicholas Epley and David Dunning discovered that we have a tendency to commit the base rate fallacy when predicting our own behavior because we have access to ample individuating information about ourselves. During their research, they gave university students five dollars and asked them to predict how much of that money they would donate to charity, as well as how much the average person would donate. After their initial predictions, participants learned about the donations of 13 of their peers, one by one. Participants were allowed to revise their predictions after the donations of three of their peers were revealed, then again after seven were revealed, and once more after the thirteenth was revealed.

In general, participants gauged their own generosity as superior to their peers. At the start of the study, the average prediction for one’s own donation was about $2.75, while the average prediction for their peers was about $2.25. The actual average amount donated was $1.50. At the three time points where they were given the chance to revise their predictions, participants adjusted their predictions of their peers’ donations to match the base rate information they had acquired. After seeing all 13 donations made by their peers, the average prediction of peers’ donations closely resembled the actual average donation amount of $1.50. But interestingly enough, participants’ predictions for themselves did not change, even as they gained more base rate information.

The reason why participants took base rate information into consideration when making predictions about their peers is that they did not have access to individuating information about any of these people. As a result, they had to rely on base rate information alone. However, this was not the case when making predictions about themselves. Participants used their own personality and past behaviors as individuating information in making the prediction about how much money they would donate. Since we tend to value individuating information more than base rate information, they did not adjust their predictions for themselves as they gained access to more base rate information.13

This demonstrates that, when no specific individuating information is available, we will use base rate information in making predictions. However, as soon as we have access to that individuating information, we latch onto it and use it instead.

Summary

What it is

The base rate fallacy refers to how we tend to rely more on specific information than we do statistics when making probability judgments.

Why it happens

There are two main factors that contribute to the occurrence of the base rate fallacy. One is the representativeness heuristic, which describes how the extent to which an event or object is representative of its category shapes our probability judgments, with little regard for base rates. Another is relevance, which suggests that we consider specific information to be more relevant than general information, and therefore selectively attend to individuating information over base rate information.

Example 1 - The cab problem

A classic illustration of the base rate fallacy involves a scenario in which 85% of the cabs in a city are blue and the rest are green. After a cab is involved in a hit-and-run, a witness claims the cab was green; however, later tests show that the witness correctly identifies the color of a cab at night only 80% of the time.

When asked what the probability is that the cab involved in the hit-and-run was green, people tend to answer that it is 80%. However, this ignores the base rate information that only 15% of the cabs in the city are green. When taking all the information into consideration, crunching the numbers shows that the likelihood that the witness was correct is actually 41%.

Example 2 - How much will you donate?

In another study, participants were asked how much of the five dollars they had been given they would donate to a charity, and to make the same prediction about the average peer. Next, they saw the actual donations of 13 peers and were given the chance to adjust their predictions. They revised their predictions about their peers to match the base rate information but did not change their predictions for themselves, because of their overreliance on individuating information.

How to avoid it

To avoid committing the base rate fallacy, we need to take a more active approach to assessing probability by paying more attention to the base rate information available to us and recognizing that individuating information is not a very reliable predictor.

Related TDL articles

Why do we use similarity to gauge statistical probability?

As discussed above, Kahneman and Tversky hypothesized that the base rate fallacy is caused by the representativeness heuristic, where we evaluate how likely an event is based on how similar it is to an existing mental prototype. Read this article to learn more about the representativeness heuristic and other types of faulty predictions it might lead us to make.

Why are we overconfident in our predictions?

The base rate fallacy is one of the main factors causing the illusion of validity, which is our tendency to be overconfident in the accuracy of our judgments, specifically in our predictions. Read this article to learn more about the situations in which the illusion of validity affects us, as well as what we can do to avoid it.