Why do we underestimate how long it will take to complete a task?

Planning Fallacy, explained.

What is the Planning Fallacy?

The planning fallacy describes our tendency to underestimate the amount of time it will take to complete a task, as well as the costs and risks associated with that task, even when this contradicts our past experience.

Where this bias occurs

John, a university student, has a paper due in one week. John has written many papers of a similar length before, and each one has generally taken him about a week to complete. Nonetheless, as he plans out his time, John is certain that he can finish the assignment in three days, so he puts off starting. In the end, he doesn’t finish the paper in time and needs to ask for an extension.

Individual effects

Just as the name suggests, the planning fallacy can lead to poor planning, causing us to make decisions that ignore the realistic demands of a task (be it time, money, energy, or something else). It also leads us to downplay the elements of risk and luck; instead, we focus only on our own abilities—and an overly optimistic assessment of our abilities at that.

Systemic effects

The planning fallacy affects everybody, whether they are a student, a city planner, or the CEO of an organization. When it comes to large-scale ventures, from disruptive construction projects to expensive business mergers, the livelihoods of many people (not to mention a whole lot of money) are at stake, and there are widespread economic and social consequences of poor planning.

How it affects product

The planning fallacy can create issues at any stage of product development. For many companies, a major concern is budgeting new ventures. Whether it's a need for additional resources, changes in material costs, or unexpected technical challenges, costs can escalate far beyond initial budgets.

Though findings are mixed, various techniques have been proposed to avoid the planning fallacy during product development. Instructing those involved in a project to imagine both the “best case” and possible pitfalls and obstacles can be effective, although individuals may still be reluctant to incorporate negative information. More successfully, encouraging individuals to consider aggregate sets of events, rather than basing predictions on a single instance, can mitigate the fallacy’s effects. For example, when setting a project timeline, a team would want to look at timing data from many similar ventures rather than from one past project.13

The planning fallacy and AI

When provided with accurate data, artificial intelligence can help limit the effects of the planning fallacy. For example, machine learning models can be trained on past project data to predict the duration and costs of similar projects. Researchers have already developed mathematical models that account for human biases in planning and optimism.14 AI could not only speed this process along but also provide a more accurate and integrated approach to planning.
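As a rough illustration of learning from past project data, the sketch below fits an ordinary least-squares line that maps planned durations to actual durations, then uses that line to adjust a new estimate. It is a deliberately simple stand-in for a machine learning model, not a description of any particular researcher's method; the function names and project figures are hypothetical.

```python
from typing import Sequence, Tuple


def fit_overrun_line(planned: Sequence[float], actual: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of: actual = slope * planned + intercept.

    Hypothetical helper standing in for a trained prediction model.
    """
    n = len(planned)
    if n != len(actual) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(planned) / n
    mean_y = sum(actual) / n
    sxx = sum((x - mean_x) ** 2 for x in planned)
    if sxx == 0:
        raise ValueError("planned durations must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(planned, actual))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict_actual(model: Tuple[float, float], planned: float) -> float:
    """Adjust a new planned duration using the fitted line."""
    slope, intercept = model
    return slope * planned + intercept


# Hypothetical history: planned vs. actual durations (in weeks) of past projects.
past_planned = [4, 8, 10, 12, 6]
past_actual = [6, 13, 14, 18, 9]

model = fit_overrun_line(past_planned, past_actual)
print(f"slope={model[0]:.2f}, intercept={model[1]:.2f}")
print(f"A 10-week plan is predicted to take about {predict_actual(model, 10):.1f} weeks")
```

A slope above 1 indicates that past projects overran roughly in proportion to their planned length. With real data, one would want many more projects, and the model's output would itself still need scrutiny.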

While AI can help mitigate the planning fallacy, it's crucial to remember that AI models can themselves be overly optimistic or biased, depending on the data they are trained on. This can create an entirely new version of the planning fallacy, in which we accept AI predictions without critically evaluating them and treat them as an entirely unbiased source.

Why it happens

We prefer to focus on the positive

The planning fallacy stems from our overall bias towards optimism, especially when our own abilities are concerned.11,12 In general, we are oriented towards positivity. We have optimistic expectations of the world and other people; we are more likely to remember positive events than negative ones; and, most relevantly, we tend to favor positive information in our decision-making processes.1,2

When it comes to our own capabilities, we are particularly bad at making accurate judgments. Take one study that asked incoming university students to estimate how they would perform academically compared to their classmates. On average, participants believed they would outperform 84% of their peers.3 Of course, this estimate may have been accurate for some students, but it is mathematically impossible for everybody to be in the top 16%.

All of this means that when we set out to plan a project, we are likely to focus on imagined successful outcomes rather than potential pitfalls, and we are likely to overestimate how capable we (and our team members) are of meeting certain goals. While enthusiasm is certainly important for any venture, it can become problematic if it comes at the expense of being realistic.

We become anchored to our original plan

Anchoring is another cognitive bias that plays a big role in the planning fallacy. Anchoring, a term coined by Muzafer Sherif, Daniel Taub, and Carl Hovland, is the tendency to rely too heavily on initial information when we are making decisions.6 When we draw up a plan for a project, we are biased to keep thinking in terms of its initial values, such as deadlines and budgets.

Anchoring is especially problematic if our original plans were unrealistically optimistic. Even if our initial predictions were massively inaccurate, we still feel tethered to those numbers even as we try to reassess. This leads us to make insufficient adjustments to our plans as we go along, preferring to make minor tweaks rather than major changes (even if major changes are necessary).

We write off negative information

Even if we do take outside information into account, we have a tendency to discount pessimistic views or data that challenges our optimistic outlook. This is the flip side of our positivity bias: our preference for affirmative information also makes us reluctant to consider the downsides.

In the business world, one example of this is competitor neglect: company executives fail to anticipate how their rivals will behave because they are focused on their own organization.3 For example, when a company decides to break into a fast-growing market, it often fails to consider that its competitors are likely to do the same, leading it to underestimate the risk.

More generally, we often make attribution errors when considering our successes and failures. Whereas we tend to ascribe positive outcomes to our talents and hard work, we attribute negative outcomes to factors beyond our control. This makes us less likely to consider previous failures: we believe those instances were not our fault, and we convince ourselves that the external factors that caused us to fail will not recur.4

We face social pressure

Organizational pressure to finish projects quickly and without problems is a major reason that the planning fallacy can be so detrimental. Workplace cultures can often be highly competitive, and there may be costs for individuals who voice less enthusiastic opinions about a project or who insist on a longer timeline than others. At the same time, executives might favor the most optimistic predictions over more realistic ones, incentivizing individuals to engage in inaccurate, intuition-based planning.

Why it is important

The planning fallacy has consequences for both our professional and personal lives, nudging us to invest our time and money in ill-fated ventures and keeping us tethered to those projects for far too long. Research has demonstrated how widespread this bias is: In the business world, it has been found that more than 80% of start-up ventures fail to achieve their initial market-share targets.3 Meanwhile, in classrooms, students report finishing about two-thirds of their assignments later than expected.4

In some fields, such as venture capital, high failure rates are often ascribed to normal levels of risk and seen as proof that the system is working as it should. However, researchers such as Dan Lovallo and Daniel Kahneman believe that these figures have much more to do with cognitive biases such as the planning fallacy.3 If more people were aware of the planning fallacy, they could take steps to counteract it, such as the ones described below.

How to avoid it

Merely being aware of the planning fallacy is not enough to stop it from happening.5 Even if we have this knowledge, we still risk falling into the trap of believing that this time, the rules won’t apply to us. Most of us strongly prefer to follow our gut, even if its forecasts have been wrong in the past. What we can do is plan around the planning fallacy and build steps into the planning process that can help us avoid it.

Take the outside view

When planning, we use two “types” of information: singular information and distributional information. Singular information is evidence related to the specific case under consideration, whereas distributional information is evidence related to similar tasks completed in the past.5 These perspectives are also referred to as the inside and outside views, respectively.3

Ideally, both singular and distributional information should be considered when planning. The planning fallacy is likely to arise when we rely solely on the inside view, that is, when we disregard external information about how likely we are to succeed and instead trust our intuitive guesses about how costly a project will be. Unfortunately, this is exactly what many of us tend to do. Because planning is an inherently future-oriented process, we are inclined to look forward, rather than backward, in time. This leads us to disregard our past experiences.4

Supplementing planning processes with distributional (outside) data, wherever possible, is a solid way to temper expectations for a project.3 If an organization or individual has completed similar projects in the past, they can use the outcomes of those previous experiences to set goals for new ones. It is just as useful to look outside one’s own experiences and see how others have fared. The main point is to make a deliberate effort not to rely solely on intuition.
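One common way to put the outside view into practice is reference class forecasting. You collect the ratio of actual to planned effort for a set of comparable past projects, then scale the new inside-view estimate by a chosen point of that distribution. The sketch below uses hypothetical project data and defaults to the 80th percentile. That cutoff, like the function names, is an illustrative assumption rather than a prescribed standard.

```python
import math
from typing import List, Sequence


def overrun_ratios(planned: Sequence[float], actual: Sequence[float]) -> List[float]:
    """Actual / planned for each comparable past project (the distributional data)."""
    return [a / p for p, a in zip(planned, actual)]


def nearest_rank_percentile(values: Sequence[float], q: float) -> float:
    """Nearest-rank percentile for 0 < q <= 100."""
    if not values or not 0 < q <= 100:
        raise ValueError("need data and 0 < q <= 100")
    ordered = sorted(values)
    rank = math.ceil(q * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def outside_view_estimate(inside_estimate: float,
                          past_planned: Sequence[float],
                          past_actual: Sequence[float],
                          q: float = 80) -> float:
    """Scale the intuitive (inside-view) estimate by the past overrun at percentile q."""
    ratios = overrun_ratios(past_planned, past_actual)
    return inside_estimate * nearest_rank_percentile(ratios, q)


# Hypothetical reference class: planned vs. actual weeks for five similar projects.
past_planned = [10, 20, 8, 12, 15]
past_actual = [12, 30, 10, 12, 24]

gut_feeling = 10  # inside-view estimate for the new project, in weeks
print(outside_view_estimate(gut_feeling, past_planned, past_actual))        # 15.0
print(outside_view_estimate(gut_feeling, past_planned, past_actual, q=50))  # 12.5
```

A higher percentile gives more protection against overruns at the cost of a more conservative plan. Either way, the adjustment is grounded in past outcomes rather than intuition alone.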

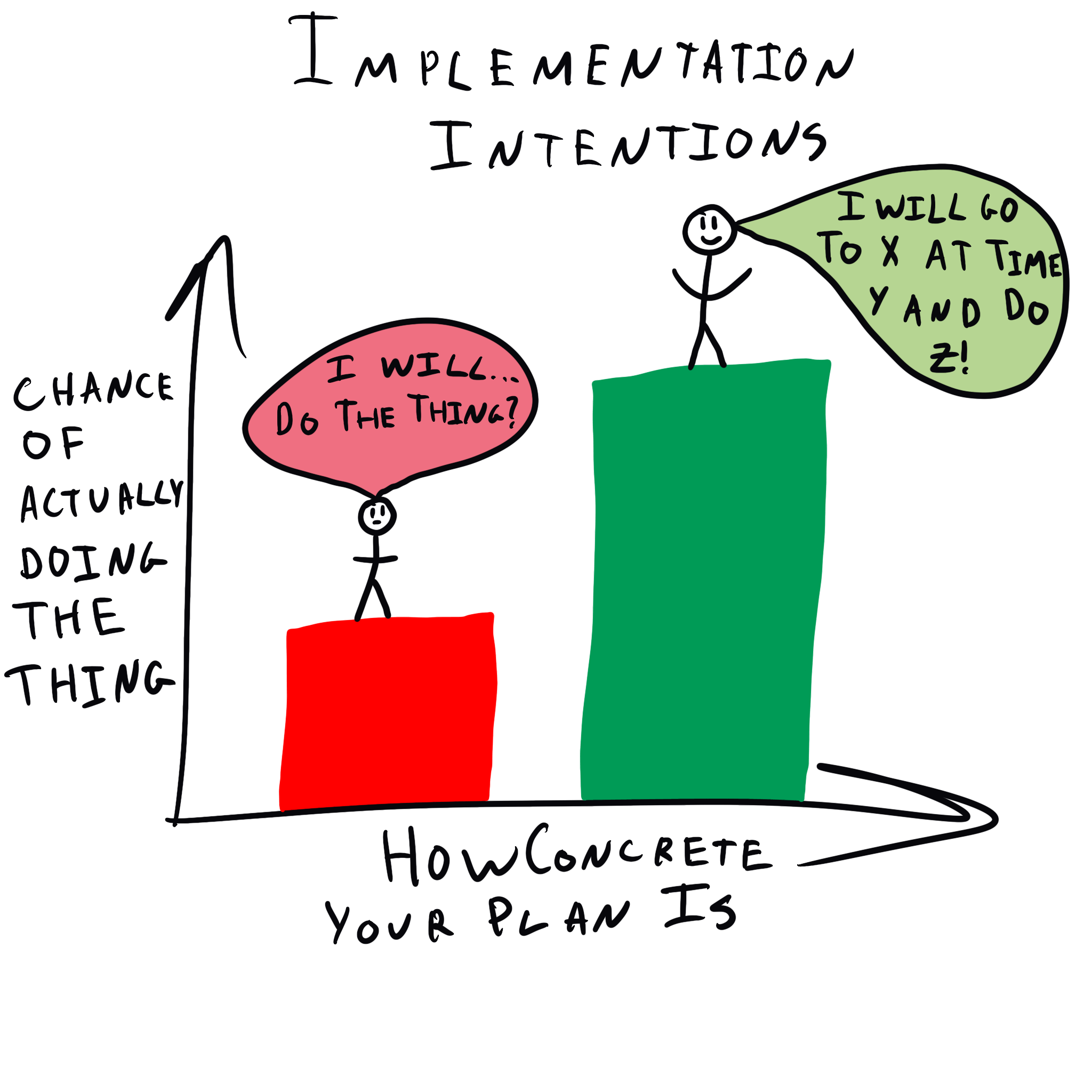

Set implementation intentions

Another strategy for combating the planning fallacy is illustrated by a study from the Netherlands, in which participants were given a writing assignment and told to complete it within a week. The participants were split into two groups. Both groups were instructed to set goal intentions, indicating the day they intended to start writing the paper and the day they believed they would finish. However, the second group also set implementation intentions, specifying the time of day and the location in which they would write. This group was also asked to visualize themselves following through on their plan.

Researchers found that setting specific implementation intentions resulted in significantly more realistic goal-setting.7 At the same time, doing so did not lessen the participants’ optimism; on the contrary, they were even more confident in their ability to meet their goals. They also reported fewer interruptions while they were working. This may be because thinking through the specifics of completing the task created a stronger commitment to following through with the plan. These results show that optimism is not incompatible with realism as long as it is combined with a carefully thought-out plan.

Use the segmentation effect for better estimates

A related strategy involves breaking up big projects into their component parts, and then planning for the completion of the smaller subtasks instead of the project as a whole. As bad as we are at estimating the amount of time required for relatively large tasks, research has shown that we are much better at planning for small ones: often, our estimates are remarkably accurate, and at worst, they are overestimates.9 This is therefore a much safer strategy: in practice, it’s much better to overestimate the amount of time needed for a project than to allocate too little.
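To make the segmentation idea concrete, the sketch below revisits a paper like John’s. Instead of one gut-feeling figure for the whole assignment, each subtask gets its own estimate, and the project estimate is their sum. The subtask names and durations are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Subtask:
    name: str
    days: float  # estimate for this piece of work on its own


def segmented_estimate(subtasks: List[Subtask]) -> float:
    """Project estimate built bottom-up from subtask estimates."""
    return sum(t.days for t in subtasks)


def compare_to_holistic(holistic_days: float, subtasks: List[Subtask]) -> Tuple[float, float]:
    """Return (segmented total, how far it exceeds the holistic guess)."""
    total = segmented_estimate(subtasks)
    return total, total - holistic_days


# Hypothetical breakdown of a term paper like John's.
paper = [
    Subtask("research sources", 2),
    Subtask("outline", 0.5),
    Subtask("first draft", 3),
    Subtask("revise", 1),
    Subtask("format citations", 0.5),
]

total, gap = compare_to_holistic(3, paper)
print(f"Segmented estimate: {total} days ({gap} more than the 3-day gut feeling)")
```

In this made-up breakdown, the bottom-up total lands close to the week John’s past papers actually took, while the holistic guess falls well short. With real tasks, the subtask estimates would come from your own judgment or past records.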

How it all started

The planning fallacy was first proposed by Daniel Kahneman & Amos Tversky, two foundational figures in the field of behavioural economics. In a 1977 paper, Kahneman and Tversky argued that, when making predictions about the future, people tend to rely largely on intuitive judgments that are often inaccurate. However, the types of errors that people make are not random, but systematic, indicating that they result from uniform cognitive biases.

In this paper, Kahneman & Tversky brought up planning as an example of how bias interferes with our forecasts for the future. “Scientists and writers,” they said, apparently drawing from experience, “are notoriously prone to underestimate the time required to complete a project, even when they have considerable experience of past failures to live up to planned schedules.” They named this phenomenon the “planning fallacy” and argued that it arose from our tendency to ignore distributional (outside) data.5

Example 1 – The Sydney Opera House

The Sydney Opera House is now one of the most iconic man-made structures in the world, but its construction was mired in delays and unforeseen difficulties that caused the project to drag on for a decade longer than planned. The original projected cost was $7 million; by the time it was done, it had cost $102 million.4

The Australian Government insisted that construction begin early, wanting to break ground while public opinion about the Opera House was still favorable and funding was still in place. However, the architect had not yet completed the final plans, leading to major structural issues that had to be addressed down the road, slowing the project down and inflating the budget. One major problem: the original podium was not strong enough to support the House’s famous shell-shaped roof and had to be rebuilt entirely.

Joseph Cahill, the politician who had championed the Opera House, rushed construction along out of fear that political opposition would try to stop it.9 In his enthusiasm, he disregarded criticisms of the project and relied on intuitive forecasts for its costs. While the building, when it was eventually finished, was beautiful and distinctive, it would have been prudent to slow down and take the outside view in planning.

Example 2 – The Canadian Pacific Railway

In 1871, the colony of British Columbia agreed to become part of Canada. In exchange for joining the Confederation, it was promised a transcontinental railway connecting BC to Eastern Canada, to be completed by 1881.4 In the end, the railway was not completed until 1885 and required $22.5 million more in loans than originally predicted.10

In initially planning the railway, its proponents had apparently not considered how difficult it would be to build through the Canadian Shield, as well as through the mountains of BC. Additionally, there was an inadequate supply of workers to build the railroad in British Columbia. Much of the British Columbia section was eventually built by around 15,000 Chinese laborers, who worked in extremely harsh conditions for very little pay.

Summary

What it is

The planning fallacy describes how we are likely to underestimate what a project will demand, such as how long it will take and how much it will cost.

Why it happens

The human brain is generally biased towards positivity, leading us to make overly optimistic predictions about our projects, as well as to disregard information that contradicts our optimistic beliefs. Once we have set unrealistic plans, other biases, such as anchoring, compel us to stick with them. Pressure from team members, superiors, or shareholders to get things done quickly and smoothly also makes it more costly for us to revise our plans partway through a project.

Example #1 – The Sydney Opera House

The Sydney Opera House is a famous example of the planning fallacy because it took 10 years longer and nearly $100 million more to complete than was originally planned. One major reason was the government’s insistence on starting construction early, despite the fact that plans were not yet finished.

Example #2 – The Canadian Pacific Railway

The Canadian Pacific Railway was finished four years late and more than $20 million over budget, largely because of a failure to plan for the difficulties of building through mountain ranges and over the Canadian Shield.

How to avoid it

The planning fallacy is best avoided by incorporating outside information into the planning process rather than relying solely on intuition. Other strategies, such as setting specific intentions to implement a plan, envisioning oneself carrying out the plan, and segmenting large projects into smaller subtasks, can also help generate more accurate estimates of how costly something will be.

Related TDL articles

Why You Might Not Be Sticking To Your Plans

This article explores a few reasons why people often fail to follow through with their plans, including the planning fallacy. Another potential explanation is the Dunning-Kruger effect, which describes how people with low ability tend to overestimate their own skills. The author also discusses the importance of planning for less-than-ideal scenarios, as well as setting implementation intentions.

The Key to Effective Teammates Isn’t Them. It’s You.

As discussed above, one reason the planning fallacy is so common is the pressure, in the workplace and elsewhere, to overachieve and to always strive for perfection. This article discusses the importance of being authentically ourselves, at work and elsewhere. When we act in a way that prioritizes genuine social connection over our own egos, we help others feel safe to do the same. By checking in with ourselves and our motivations, asking ourselves whether we are acting in accordance with our values and beliefs, we can create an atmosphere more accepting of imperfections.